Ever wonder if changing a button's color from blue to green could actually get you more sales? Or if a different headline might convince more people to sign up for your newsletter? Instead of just guessing, you can find out for sure. That's where A/B testing comes in.

At its core, A/B testing is a straightforward way to compare two versions of something—a webpage, an email, a popup—to see which one performs better. You show Version A (the original) to one group of users and Version B (the new version) to another. Then, you simply measure which one got you closer to your goal. It’s that simple.

The Core Concept Behind A/B Testing

Think of it like an eye exam. The optometrist flips between two lenses and asks, "Which is better, one or two?" By comparing them directly, you can easily tell which one helps you see clearly. A/B testing, often called split testing, does the exact same thing for your website.

Instead of relying on gut feelings or what you think your audience wants, you let their actions give you the real answer. This simple but powerful idea pulls personal opinion out of your marketing decisions. It replaces "I think this will work" with "I know this works because the data proves it."

The Key Components of Any Test

Every A/B test, no matter how big or small, is built on a few essential parts. Getting these terms down is the first step to setting up experiments that give you reliable data and clear, actionable results.

| Component | Description | Example |
|---|---|---|
| The Control (Version A) | Your original, unchanged version. This is the baseline you'll measure everything against: the "lens one" in our eye exam analogy. | Your current homepage with its blue "Sign Up" button. |
| The Variation (Version B) | The modified version you're testing against the control. The trick is to change only one significant element at a time. | The same homepage, but with a green "Sign Up" button. |
| The Goal Metric | The specific, measurable outcome you're trying to improve. This defines what "winning" actually looks like for your test. | The number of people who click the "Sign Up" button (click-through rate). |

This structured approach turns a vague idea like "let's improve the homepage" into a testable hypothesis. For instance, you might hypothesize that changing your "Buy Now" button from blue to green will increase clicks because green psychologically suggests "go." An A/B test is how you prove (or disprove) that idea.

A Method Rooted in Scientific History

While it feels like a modern digital marketing tactic, the idea behind A/B testing is nothing new. Its roots are in the randomized controlled experiments developed by statistician Ronald Fisher back in the 1920s to test agricultural methods. This scientific approach was later adopted for clinical trials and eventually found its way into direct mail marketing in the 1960s. You can explore a brief history on A/B testing to see just how deep these roots go.

By isolating a single variable and measuring its impact on a specific outcome, you can determine with statistical confidence whether a change produces a positive, negative, or neutral result. This is the essence of data-driven optimization.

Ultimately, A/B testing is all about learning. Every test—whether it’s a huge win or a total flop—tells you something valuable about what makes your audience tick. Over time, these small, incremental insights add up, leading to major improvements in user experience and conversion rates. That's what makes it a cornerstone of any effective digital strategy.

Why A/B Testing Drives Business Growth

Knowing what A/B testing is comes first, but understanding its direct impact on your bottom line is where the magic really happens. The most successful companies don't see split testing as a party trick; it's a core part of their strategy. It’s a reliable engine for sustainable, data-backed growth that replaces guesswork with evidence.

This goes way beyond just tweaking button colors. It’s a disciplined approach where small, validated improvements stack up over time. By consistently testing and implementing the winning versions, you can create a serious lift in your most important KPIs—from conversion rates and user engagement all the way to revenue.

Turning Small Wins into Major Momentum

The real beauty of A/B testing lies in its incremental power. A 1% bump in your conversion rate might not sound like a game-changer on its own, but when you string those small wins together, they add up to massive growth over the year. Every single test is a learning opportunity, refining your website into a more effective sales and marketing tool with each experiment.

Imagine testing a new headline on a product page that leads to a 5% increase in add-to-cart clicks. Awesome. A few weeks later, you test a new product image and see another 3% lift. These validated changes build on each other, creating a powerful cumulative effect you'd never achieve by just going with your gut. For a closer look at this process, check out the key benefits of A/B testing for data-driven companies.
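To see how those wins stack, here is a minimal Python sketch of the arithmetic. It assumes, for illustration only, that both lifts apply to the same metric and act independently, so they multiply rather than simply add. The function name `compound_lift` is made up for this example.

```python
def compound_lift(lifts):
    """Combine successive relative lifts (0.05 means +5%) into one overall lift."""
    factor = 1.0
    for lift in lifts:
        factor *= 1.0 + lift  # each validated win builds on the previous ones
    return factor - 1.0


# The two wins from the example above: +5% from the headline, +3% from the image.
overall = compound_lift([0.05, 0.03])
print(f"Overall lift: {overall:.2%}")  # Overall lift: 8.15%
```

Two modest wins already land a little above the 8% you'd get by adding them, and the gap widens as more validated changes pile up.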

Mitigating Risk Before Major Launches

Let's be real: launching a completely redesigned homepage or a brand-new feature is a huge investment of time, money, and energy. And what if it flops? A/B testing acts as your safety net, allowing you to de-risk these big moves by proving their value on a smaller scale first.

Instead of pushing a new design live to 100% of your audience and crossing your fingers, you can test it against the original with just a small segment—say, 10% of your traffic. If the new version bombs, you’ve dodged a costly mistake that could have tanked your metrics. But if it wins, you can roll it out with the confidence that it’s already a proven success.

A/B testing transforms high-stakes gambles into calculated decisions. It provides the evidence needed to invest in big changes, so your development and design efforts go toward initiatives that have already shown they can move the needle.

Unlocking Invaluable Customer Insights

Beyond just boosting metrics, every A/B test offers a peek inside your customers' minds. It's a direct line to understanding their psychology—what motivates them, what language resonates, and what causes them to hesitate.

- Discover what language connects: Does a direct, benefit-driven headline work better than a clever, witty one? Your audience's clicks will give you the answer.

- Identify visual preferences: Do your visitors respond more to professional stock photos or authentic, user-generated content? A simple test can reveal what builds the most trust.

- Understand offer appeal: Is a "20% Off" discount more compelling than a "Free Shipping" offer? Split testing can show you which promotion actually drives more sales.

These insights are gold. They don't just improve the single element you're testing; they inform your entire marketing strategy, from ad copy and email campaigns to future product development. You can learn more about how to boost your conversion rate with Divi A/B testing by applying these principles directly to your site.

Ultimately, A/B testing turns your website from a static brochure into a dynamic laboratory for understanding—and serving—your customers better than ever before.

Finding Your Best A/B Testing Opportunities

Once you grasp the power of A/B testing, the next big question is always, "Okay… but what should I actually test?" The sheer number of options can feel paralyzing. The secret is to ignore the random tweaks and focus on changes that have the greatest potential to influence user behavior and, ultimately, your bottom line.

Think of it like a mechanic tuning a high-performance engine. They don’t just start turning random screws. They zero in on the parts that directly impact performance—the fuel intake, the spark plugs, the timing. Your testing strategy should be just as focused, concentrating on the website elements that most directly affect your conversions, engagement, and revenue.

High-Impact Elements to Start Testing

Every website has a handful of critical components that carry the most weight in a visitor's decision-making process. These are the perfect places to start your A/B testing journey because even small improvements can deliver significant results.

Here are some of the best candidates for your first experiments:

- Headlines and Subheadings: Your headline is the first thing almost everyone reads. A simple test can reveal whether a benefit-driven headline ("Get More Leads in 5 Minutes") crushes a feature-focused one ("Our Software Has Automated Workflows").

- Call-to-Action (CTA) Buttons: The words, color, size, and placement of your CTAs are mission-critical. Try testing direct language like "Get Started" against something softer like "Explore Our Plans." I've seen a simple color change from gray to a vibrant orange lift clicks by double-digit percentages.

- Images and Videos: The visuals on your page aren't just decoration; they build trust and communicate value instantly. You could test a professional stock photo against a candid team picture or see if adding a short product demo video increases time on page.

Your goal is not just to find a "winner" but to understand why it won. A successful test on a CTA button doesn't just improve one page; it gives you a valuable insight into the kind of language and design that motivates your entire audience.

Testing Dynamic Content with Divi Areas Pro

Modern websites are so much more than static pages. Dynamic elements like popups, announcement bars, and fly-ins are powerful tools for grabbing attention and driving action. With a tool like Divi Areas Pro, you can easily A/B test these components to squeeze every last drop of performance out of them, all without touching a line of code.

For example, imagine you create two different exit-intent popups for users who are about to leave your site:

- Version A: Offers a 15% discount.

- Version B: Offers free shipping on their first order instead.

By running this test, you'll learn exactly which incentive is more compelling for your hesitant buyers. The same logic applies to testing different headlines on an announcement bar ("Limited Time Offer" vs. "Save 20% Today") or varying the trigger for a slide-in promotion. This is how you fine-tune every interactive touchpoint on your site.

Prioritizing Your Tests for Maximum Results

With a notebook full of ideas, it's crucial to prioritize. Not all tests are created equal. You should always focus on pages with high traffic but low conversion rates—these are your biggest opportunities for growth.

Think about it: a 5% improvement on your homepage or main product page will have a much larger business impact than a 20% improvement on a blog post that gets a handful of visits each month.
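A quick back-of-the-envelope calculation makes the point concrete. The sketch below uses made-up traffic and conversion numbers (50,000 monthly homepage visitors, 200 monthly blog post visitors, a 2% baseline conversion rate on both); swap in your own analytics figures.

```python
def extra_conversions(monthly_visitors, baseline_rate, relative_lift):
    """Additional conversions per month gained from a relative lift."""
    return monthly_visitors * baseline_rate * relative_lift


# Hypothetical numbers, for illustration only.
homepage = extra_conversions(monthly_visitors=50_000, baseline_rate=0.02, relative_lift=0.05)
blog_post = extra_conversions(monthly_visitors=200, baseline_rate=0.02, relative_lift=0.20)

print(f"Homepage, +5% lift:   about {homepage:.1f} extra conversions per month")
print(f"Blog post, +20% lift: about {blog_post:.1f} extra conversions per month")
```

Under these assumptions the smaller lift on the busy page is worth roughly 50 extra conversions a month, while the bigger lift on the quiet page is worth less than one.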

To help you brainstorm, I've put together a table of common testing ideas organized by website element. Use this as a starting point to generate your own experiments.

A/B Testing Ideas by Website Element

| Website Element | Variable to Test (Version A vs. Version B) | Potential Impact Metric |
|---|---|---|
| Homepage Hero Section | A headline focused on features vs. a headline focused on benefits. | Bounce Rate, Clicks to Product Pages |
| Product Page CTA | "Add to Cart" button text vs. "Buy Now" button text. | Add to Cart Rate, Conversion Rate |
| Pricing Page | Displaying annual pricing first vs. displaying monthly pricing first. | Sign-ups, Average Order Value |
| Lead Generation Form | A short form (email only) vs. a longer form (name, email, company). | Form Submissions, Lead Quality |
| Blog Post Layout | A single-column layout vs. a layout with a sidebar. | Time on Page, Scroll Depth |

By systematically testing these high-leverage elements, you'll start gathering data-backed insights that do more than just optimize individual pages. You'll build a deep understanding of your audience that informs your entire marketing strategy, leading to smarter decisions and more consistent growth.

Your Step-By-Step Guide to Running an A/B Test

Now that you know what to test, let's walk through exactly how to do it. Turning your ideas into a real experiment might sound intimidating, but it’s actually a straightforward process once you break it down. Think of this guide as your roadmap to launching your first A/B test and gathering data that actually means something.

A successful test is built on a solid foundation. You can’t just change a button color on a whim and hope for the best; you need a clear goal and a good reason for making the change. A disciplined approach ensures your results are meaningful and that every experiment—win or lose—teaches you something valuable about your audience.

Step 1: Identify a Clear Goal

Before you touch a single pixel, you have to define what victory looks like. What specific metric are you trying to move the needle on? A fuzzy goal like "get more engagement" is impossible to measure. You need to get specific with a concrete, quantifiable outcome.

For instance, your goal could be:

- Increasing the click-through rate on your main call-to-action button.

- Reducing the bounce rate on a key landing page.

- Boosting form submissions for your newsletter signup.

- Improving the add-to-cart rate on a popular product page.

Choosing one primary metric keeps your test focused and makes it dead simple to interpret the results.

Step 2: Form a Strong Hypothesis

A hypothesis isn't just a random guess. It's an educated assumption about why a specific change will lead to the outcome you want. It connects the dots between your proposed change and an expected result, giving your test purpose. This is what separates strategic testing from just throwing spaghetti at the wall.

Use this simple but powerful template to frame your hypothesis:

By changing [The Thing You're Testing], we expect to see [Your Desired Outcome] because [The Reason You Think It Will Work].

For example: "By changing our CTA button text from 'Learn More' to 'Get Your Free Trial,' we expect sign-ups to increase because the new text is more specific and instantly communicates value."

Step 3: Create Your Variation

With a clear hypothesis in hand, it’s time to build your "Version B." This is the modified version of your page that you'll test against the original (the "control"). The golden rule here is to only change one significant element at a time. If you change the headline, the button color, and the main image all at once, you’ll have no idea which change actually made a difference.

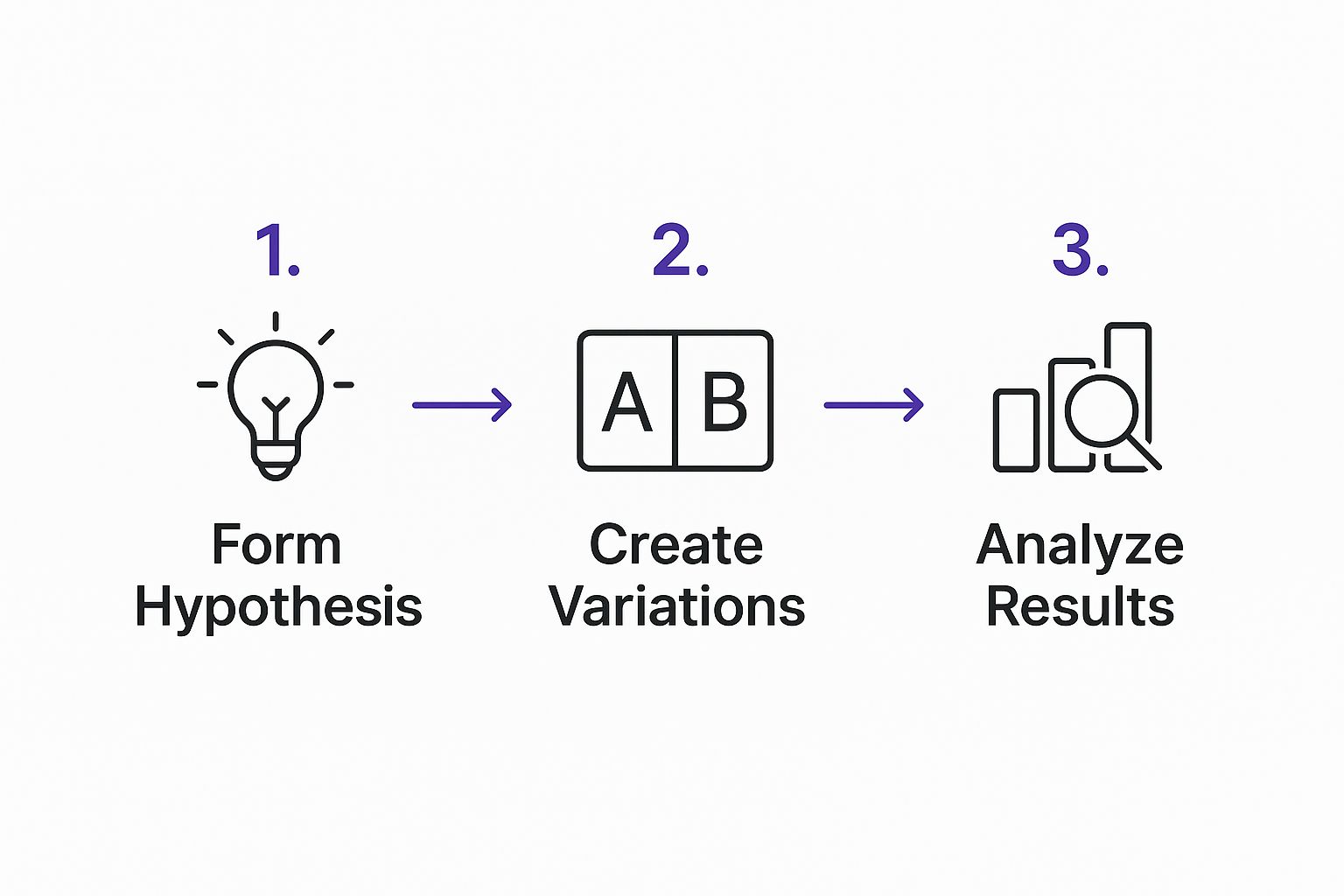

The infographic below breaks down this logical flow, from forming an idea to analyzing the outcome.

This visualizes the core A/B testing workflow, showing how a structured idea leads to a measurable result.

Step 4: Set Up and Run the Experiment

Now for the fun part. It's time to use your A/B testing tool to set up the experiment. You'll define your control and variation pages, specify your goal, and decide how to split traffic between the two versions (a 50/50 split is almost always the best way to go).
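Your testing tool handles the traffic split for you, but it helps to see the idea underneath. The Python sketch below shows one common way to do it: hash the visitor ID so each person lands in the same version on every visit. The function name, the experiment label, and the `variation_share` parameter are all made up for illustration; this is not how any particular tool implements it.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variation_share: float = 0.5) -> str:
    """Return "A" (control) or "B" (variation), stable for a given visitor."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 4 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return "B" if bucket < variation_share else "A"


# The same visitor always sees the same version of a given experiment.
print(assign_variant("user-42", "cta-color"))

# Across many visitors, a 0.5 share lands close to a 50/50 split.
groups = [assign_variant(f"user-{i}", "cta-color") for i in range(10_000)]
print(groups.count("A"), groups.count("B"))
```

Hashing instead of flipping a coin on every page load keeps the experience consistent for returning visitors, and including the experiment name in the key means a visitor's group in one test doesn't determine their group in the next.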

Once everything is configured, launch the test and let it run. This is where patience comes in. It's crucial to let the test collect enough data to achieve statistical significance. Ending a test too early is one of the most common mistakes people make, and it often leads to making decisions based on random chance. Trust the process.
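How much data is "enough"? You can get a rough estimate before you launch. The sketch below uses a standard normal-approximation formula for comparing two conversion rates. The 95% confidence and 80% power defaults are conventional choices rather than anything specific to your tool, and the 5% baseline rate and 10% target lift are made-up inputs.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH version to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired chance of catching a real lift
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# Hypothetical inputs: 5% baseline conversion rate, hoping to detect a 10% relative lift.
needed = sample_size_per_variant(baseline_rate=0.05, relative_lift=0.10)
print(f"Visitors needed per version: {needed:,}")
```

With these inputs the estimate comes out at roughly 31,000 visitors per version. Small lifts on low conversion rates need a lot of traffic, which is why patience matters so much here.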

Step 5: Analyze the Results

After the test has run its course, it's time to dig into the data. Your testing tool will show you how the control and the variation performed against the goal you set. Look at the numbers, but more importantly, try to understand the story they're telling. Did your new version win, lose, or make no real difference?

By 2025, A/B testing has become a non-negotiable strategy for top digital companies. Google, which ran its first test way back in 2000, now conducts over 10,000 tests a year. One of their most famous experiments involved testing 41 different shades of blue for link text, showing that even tiny tweaks can have a measurable impact on user behavior.

No matter the outcome, document what you learned. A "losing" test is still a win because it teaches you what doesn't work for your audience, saving you from rolling out a bad change across your entire site. These learnings are gold and will inform your next great idea. For more inspiration, check out these 5 A/B tests to run on your Divi website.

Making Sense of Your A/B Test Results

The test is over, traffic has been split, and your tool is brimming with data. So, what's next? This is where you learn to read the story your results are telling you. Honestly, interpreting the outcome is just as crucial as setting up the test properly in the first place—it's what guides your next move.

You can't just glance at the numbers and declare a winner. A variation that gets a few more clicks after only a couple of hours might just be lucky. To make decisions you can actually stand behind, you need to get comfortable with one of the most important ideas in A/B testing: statistical significance.

The Power of Statistical Significance

Think of it like flipping a coin. If you flip it 10 times and get 7 heads, you wouldn't immediately claim the coin is biased. It could just be random chance. But if you flip it a thousand times and get 700 heads, you can be much more confident that something is truly different about that coin.
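You can put numbers on that intuition. This short Python sketch computes the exact chance of seeing at least that many heads from a perfectly fair coin; the function name is just illustrative.

```python
from math import comb


def prob_at_least(heads: int, flips: int) -> float:
    """Probability of at least `heads` heads in `flips` flips of a fair coin."""
    favorable = sum(comb(flips, k) for k in range(heads, flips + 1))
    return favorable / 2**flips


print(prob_at_least(7, 10))      # 0.171875, so about a 17% chance with a fair coin
print(prob_at_least(700, 1000))  # vanishingly small
```

Seven or more heads in ten flips happens about one time in six with a fair coin, so it proves nothing. Seven hundred or more in a thousand is so unlikely that "the coin is fair" stops being a believable explanation.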

Statistical significance works the same way for your website traffic. It’s a mathematical way of measuring confidence, telling you whether your results are due to the changes you made or just random luck. Without it, you’re just guessing.

The whole point of A/B testing is to figure out if the differences you see are actually meaningful. This is where the p-value comes in, which is just a fancy way of saying "the probability of seeing a difference at least this big if your control and variant actually performed the same."

The industry standard is a p-value below 0.05. This means that if your change truly made no difference, a result this extreme would show up less than 5% of the time by chance alone.

This chart shows how confidence in a test result grows as you collect more data, ironing out the random ups and downs.

As your sample size gets bigger, the margin of error shrinks, giving you a much clearer picture of how each version is really performing.
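Your testing tool will calculate significance for you, and different tools use different statistical methods. To show the general idea, here is a minimal sketch of one common approach, a two-proportion z-test, using only Python's standard library. The visitor and conversion counts are made up.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a, visitors_a, conv_b, visitors_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    # Pooled rate: the best single estimate if both versions performed the same.
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical test: control converts 200 of 5,000 visitors, variation 250 of 5,000.
p = two_proportion_p_value(200, 5000, 250, 5000)
print(f"p-value: {p:.3f}")
print("Significant at 0.05" if p < 0.05 else "Not significant at 0.05")
```

In this made-up example the p-value lands below 0.05, so the one-point difference in conversion rate would count as statistically significant. The sketch assumes both versions have at least some conversions and some non-conversions; with all-or-nothing data the standard error would be zero.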

Interpreting the Three Possible Outcomes

Once your test has collected enough data, your results will fall into one of three buckets. Each one teaches you something valuable about your audience.

A Clear Winner: This is what we all hope for. Your variation (Version B) smashed the control (Version A) on your primary goal. For example, your new green button got a 15% higher click-through rate with 98% statistical confidence. The next step is a no-brainer: roll out the winning version and document what you learned for next time.

A Clear Loser: Your variation performed significantly worse than the control. It might sting a bit, but this is actually a huge win. You’ve just saved yourself from rolling out a change that would have tanked your conversions. This result is just as valuable because it teaches you what doesn't work for your audience.

An Inconclusive Result: Sometimes, a test ends with no statistically significant difference between the two versions. This isn't a failure; it’s an insight. It tells you that the element you changed didn't move the needle enough to detect, at least not with the traffic you had. That's great information: it helps you stop wasting time on things that matter little and focus your next tests on elements that do.

Every test, regardless of the outcome, is a learning opportunity. A winning test improves your metrics, while a losing or inconclusive test improves your understanding of your customers.
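The three buckets boil down to a simple decision rule. Here it is as a small Python sketch, assuming you already have each version's conversion rate and a p-value from your testing tool. The function name and the 0.05 threshold are illustrative defaults.

```python
def classify_result(rate_control, rate_variation, p_value, alpha=0.05):
    """Sort a finished test into one of the three outcome buckets."""
    if p_value >= alpha:
        return "inconclusive"  # the difference could easily be random noise
    return "winner" if rate_variation > rate_control else "loser"


# Hypothetical results from three different tests.
print(classify_result(0.040, 0.050, p_value=0.016))  # winner
print(classify_result(0.040, 0.031, p_value=0.012))  # loser
print(classify_result(0.040, 0.042, p_value=0.430))  # inconclusive
```

Notice that the significance check comes first: a variation that is ahead on raw numbers but not statistically significant still belongs in the inconclusive bucket.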

When you dig into your data, looking at A/B variant engagement metrics can also give you great insights that go beyond simple conversion rates. By analyzing your results, you'll get much better at making data-driven decisions that consistently improve your site. And if you're looking for more ways to act on these findings, check out our guide with other powerful tips to boost your WordPress conversion rate.

Common A/B Testing Mistakes (And How to Dodge Them)

It's easy to run an A/B test. It's much harder to run a good one. Even with the best intentions, a few simple mistakes can completely torpedo your results, leading you to make bad decisions with a false sense of confidence. Frankly, a flawed test is worse than no test at all.

Think of it like a science experiment in the classroom. If you don't control the variables, your results are just noise. The same discipline applies here—getting reliable insights from your A/B tests is non-negotiable.

Mistake 1: Testing Too Many Things at Once

It’s tempting, right? You want to see a big lift, so you decide to change the headline, swap out the main image, and give that call-to-action button a vibrant new color, all in one go. You launch the test, and boom—the new version is a winner!

But… why? Was it the catchy headline? The new hero image? The button color? You'll never know.

This "throw everything at the wall" approach bundles several changes into a single variation, so their effects can't be separated. It isn't a multivariate test either: a true multivariate test serves every combination of the changes as its own variation and needs far more traffic. If you want clear, actionable feedback, you have to stick to changing just one significant element at a time. This isolates the variable and tells you exactly what moved the needle.

- How to Dodge It: Before you start, ask yourself one simple question: "What am I really trying to learn here?" If your hypothesis is about button text, then only change the button text. That laser focus is what delivers a clean, easy-to-understand result.

Mistake 2: Calling the Winner Too Early

You launch a test, and after a day or two, one version is already pulling ahead. The excitement is real, and the urge to declare a winner and move on is powerful. Resist that urge. This is probably the single most common—and most damaging—mistake in A/B testing.

Early results are often just random statistical noise. They don't reflect how your real audience behaves over time.

User behavior changes. It's different on a Monday morning than it is on a Saturday night. A test has to run long enough to smooth out those peaks and valleys and capture a truly representative sample of your visitors.

Ending a test prematurely is like judging a marathon based on the first 100 meters. You need to let the experiment run its full course to see which version truly has the stamina to win.

- How to Dodge It: Let your test run for at least one full business cycle, which is usually one to two weeks. More importantly, don't stop until your testing tool confirms you've hit statistical significance, typically a confidence level of 95% or higher, on the full sample you planned for. Checking daily and stopping the moment the number crosses 95% makes a false positive more likely.

Mistake 3: Ignoring the World Outside Your Website

Your website doesn't operate in a bubble. Major events can send tidal waves through your traffic and user behavior, and if you're not paying attention, they can completely skew your test results.

Imagine running a test on an e-commerce site during Black Friday. The results you get will be wildly different from a normal Tuesday in April, right? Other factors that can throw a wrench in your data include:

- Holidays: Major holidays dramatically alter traffic patterns and buying habits.

- Marketing Campaigns: A new Google Ads campaign, a viral tweet, or a big email blast can flood your site with a very specific, and possibly atypical, type of visitor.

- PR Events: A feature in a major publication or a bit of negative press can temporarily change who is visiting your site and why.

- How to Dodge It: Always be aware of your own marketing calendar and major external events. If something unexpected happens mid-test, like a massive referral spike from an unplanned source, consider pausing the experiment or letting it run longer to see if the data normalizes. Always, always analyze your results in the context of what's happening in the real world.

Got Questions About A/B Testing?

As you get ready to jump in and run your own experiments, a few practical questions almost always pop up. Let's walk through the most common ones so you can move forward with confidence and sidestep the usual roadblocks on your way to making smarter, data-driven decisions.

How Long Should an A/B Test Run?

This is the big one, and the answer isn't a simple number of days. The right duration for your test really boils down to two things: your website traffic and hitting statistical significance. A high-traffic site might get enough data in a week, while a smaller one could need a month or more to get a reliable result.

Instead of just picking a date on the calendar, your goal is to let the experiment run until you've collected enough data to trust the outcome. Most A/B testing tools aim for a 95% confidence level before they'll declare a winner. It's also a good idea to run any test for at least one full week to account for the natural ups and downs of traffic—think weekend visitors versus weekday browsers.

The golden rule here is to let the data, not the calendar, decide when a test ends: estimate how many visitors each version needs, run until you have them, and only then judge whether the result is statistically significant. Stopping a test too early is one of the biggest mistakes you can make, as you might end up making a decision based on random chance instead of what your users actually prefer.

What Is the Difference Between A/B and Multivariate Testing?

While they sound similar, A/B and multivariate testing are designed for different jobs. Here’s a simple way to think about it:

A/B Testing is like a head-to-head duel. You pit one version against another (a blue button vs. a green one) to find a single, clear winner. It's perfect when you have a focused hypothesis and want to test a bold change.

Multivariate Testing is more like a complex tournament. You test multiple changes all at once—say, two different headlines, three images, and two button colors—to discover which combination of elements performs best. This approach is way more complex and requires a ton of traffic to work.

For most websites, A/B testing is the best place to start. It’s simpler, the insights are clearer, and you get actionable results much faster.
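To see why multivariate testing is so traffic-hungry, count the combinations. This Python sketch uses the example above (two headlines, three images, two button colors) with placeholder labels and a made-up monthly traffic figure.

```python
from itertools import product

headlines = ["Headline 1", "Headline 2"]
images = ["Image 1", "Image 2", "Image 3"]
button_colors = ["blue", "green"]

# Every combination becomes its own version of the page.
combinations = list(product(headlines, images, button_colors))
print(len(combinations))  # 12

# Hypothetical traffic: 24,000 visitors a month, split evenly.
monthly_visitors = 24_000
print(monthly_visitors // 2)                   # 12000 per version in an A/B test
print(monthly_visitors // len(combinations))   # 2000 per version in the multivariate test
```

The same traffic that gives each A/B version 12,000 visitors leaves each multivariate combination with only 2,000, so reaching a trustworthy result takes much longer.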

Can A/B Testing Hurt My SEO?

This is a totally valid concern, but the short answer is no, as long as you follow a few best practices straight from Google. Search engines actually understand and encourage A/B testing because it’s a great way to improve user experience.

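To keep your tests SEO-friendly, you just need to do a few things. First, use a rel="canonical" tag on your variation pages that points back to the original URL. This tells search engines, "Hey, this isn't duplicate content, it's just a test." Google recommends this over a noindex tag. Second, if your test redirects visitors to a variation URL, use a temporary (302) redirect rather than a permanent (301) one. Third, show search engine crawlers the same content real visitors see, and end the test once you have your answer. The good news? Most reputable A/B testing tools handle much of this for you automatically.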

What Are Some Good A/B Testing Tools?

There are plenty of excellent tools out there that let you get started without needing to be a developer. Some of the most popular and effective options include:

- Google Optimize: Long the go-to free option that plugged right into Google Analytics. Google discontinued it in September 2023, so it's no longer available for new tests.

- Optimizely: A feature-rich platform built for enterprise-level testing.

- VWO (Visual Website Optimizer): An all-in-one optimization suite that's a big hit with marketers.

- Divi Leads: The A/B testing feature built right into the Divi theme, which makes it incredibly simple for Divi users to start split testing page elements.

The right tool for you really depends on your budget, how comfortable you are with the tech, and what you're trying to achieve.

Ready to stop guessing and start making data-backed improvements to your Divi site? Divimode gives you the tools and expert guidance you need. With Divi Areas Pro, you can easily A/B test popups, announcement bars, and other dynamic content to see what truly captures your audience's attention and drives them to act.

Discover how Divi Areas Pro can transform your website at divimode.com